About

The new millennium has brought increased pressure to find new renewable energy sources. The exponential increase in population has contributed to global crises such as global warming, environmental pollution and change, and the rapid depletion of fossil fuel reserves. In addition, demand for electric power is rising much faster than other energy demands as the world becomes industrialized and computerized. Under these circumstances, research has been carried out into the possibility of building a power station in space that transmits electricity to Earth by way of radio waves: the Solar Power Satellite. A Solar Power Satellite (SPS) converts solar energy into microwaves and sends those microwaves in a beam to a receiving antenna on Earth for conversion to ordinary electricity. SPS is a clean, large-scale, stable electric power source. Solar Power Satellites are known by a variety of other names, such as Satellite Power System, Space Power Station, Space Power System, Solar Power Station and Space Solar Power Station.

Klystron

Here a high-velocity electron beam is formed, focused and sent down a glass tube to a collector electrode, which is at a high positive potential with respect to the cathode. As the electrons, travelling at constant velocity, approach gap A (the buncher gap), they are velocity modulated by the RF voltage across this gap: some are accelerated and others are slowed, depending on the phase of the RF cycle at which they cross. As the beam progresses further down the drift tube, the faster electrons catch up with the slower ones and bunching takes place. The beam therefore passes the catcher gap in quite pronounced bunches, so the current there varies cyclically with time. This variation in current enables the klystron to have significant gain: the catcher cavity is excited into oscillation at its resonant frequency and a large output is obtained.
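The bunching described above can be illustrated with a minimal ballistic sketch. It is not a klystron design tool: space-charge forces are ignored, and the function names, the modulation depth of 1% and the transit angle of 184 radians are all assumptions chosen for illustration, not values from the text. Electrons leave the buncher gap evenly spread over one RF cycle, each with a slightly different velocity, and the code measures how strong the RF-frequency component of the beam current is when they arrive at the catcher gap.

```python
import cmath
import math


def arrival_phases(depth, transit_angle, n_electrons=10000):
    """Arrival phase at the catcher gap for electrons that leave the
    buncher gap uniformly spread over one RF cycle.

    depth         -- fractional velocity modulation (assumed value)
    transit_angle -- RF phase elapsed while an unmodulated electron
                     crosses the drift tube, in radians (assumed value)
    """
    phases = []
    for i in range(n_electrons):
        phi = 2 * math.pi * i / n_electrons      # departure phase at the buncher gap
        velocity = 1 + depth * math.sin(phi)     # velocity relative to the unmodulated beam
        phases.append(phi + transit_angle / velocity)  # faster electrons arrive earlier
    return phases


def fundamental_current(depth, transit_angle):
    """Amplitude of the RF-frequency component of the beam current at
    the catcher gap, relative to the DC beam current."""
    phases = arrival_phases(depth, transit_angle)
    return 2 * abs(sum(cmath.exp(-1j * p) for p in phases)) / len(phases)


if __name__ == "__main__":
    print("no modulation:  ", fundamental_current(0.0, 184.0))
    print("1% modulation:  ", fundamental_current(0.01, 184.0))
```

With no modulation the beam stays uniform and the RF component is essentially zero. With a small velocity modulation and a long enough drift, the RF component exceeds the DC beam current, which is the cyclic current variation that excites the catcher cavity.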

An SPS, as mentioned before, is massive, and because of its size it would have to be constructed in space. Recent work also indicates that this unconventional but scientifically well-founded approach should permit the production of power satellites without the need for any rocket vehicle more advanced than existing ones. The plan envisioned sending small segments of the satellite into space using the space shuttle. The projected cost of an SPS could be considerably reduced if extraterrestrial resources were employed in its construction. One often-discussed road to lunar resource utilization is to start with the mining and refining of lunar oxygen, the most abundant element in the Moon's crust, for use as a component of rocket fuel to support a lunar base as well as exploration missions. Lunar aluminum and silicon can be refined to produce solar arrays.

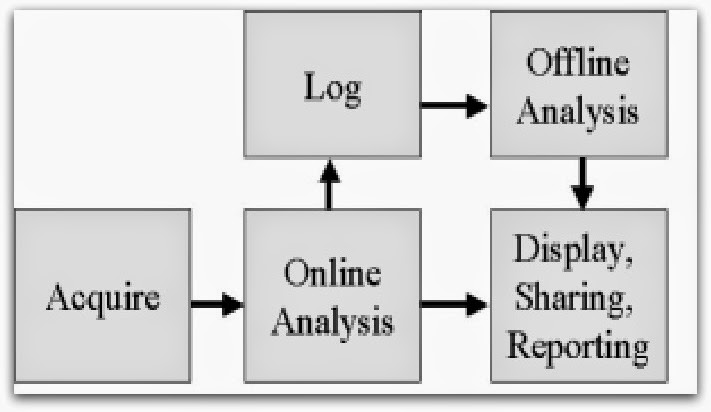

Beam Control

A key system and safety aspect of wireless power transmission (WPT) is its ability to control the power beam. Retrodirective beam control systems have been the preferred method of achieving accurate beam pointing. As shown in the figure, a coded pilot signal is emitted from the rectenna towards the SPS transmitter to provide a phase reference for forming and pointing the power beam. To form the power beam and point it back towards the rectenna, the phase of the pilot signal captured by the receiver at each subarray is compared to an onboard reference frequency distributed equally throughout the array. If a phase difference exists between the two signals, the received signal is phase conjugated and fed back to the dc-RF converter. In the absence of the pilot signal, the transmitter automatically dephases its power beam, and the peak power density decreases by a factor equal to the number of transmitter elements.
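The phase-conjugation step and the dephasing claim can be checked with a small numerical sketch. Everything here is an illustrative assumption rather than part of the system described: the 0.12 m wavelength, the 64-element line array, the geometry, the function names, and the simplification that every element radiates unit amplitude with no path loss. Each element records the phase of the pilot arriving from the rectenna and transmits with the opposite (conjugated) phase, so the contributions from all elements arrive back at the rectenna in step.

```python
import cmath
import math
import random

WAVELENGTH = 0.12  # metres; illustrative assumption, not a value from the text


def pilot_phases(elements, rectenna):
    """Phase of the pilot signal as received at each transmitter element."""
    k = 2 * math.pi / WAVELENGTH
    return [-k * math.dist(e, rectenna) for e in elements]


def power_at(point, elements, tx_phases):
    """Relative power density at `point` for unit-amplitude elements
    driven with the given phases (path loss ignored)."""
    k = 2 * math.pi / WAVELENGTH
    field = sum(
        cmath.exp(1j * (phase - k * math.dist(e, point)))
        for e, phase in zip(elements, tx_phases)
    )
    return abs(field) ** 2


def retrodirective_power(elements, rectenna):
    """Power at the rectenna when every element conjugates the pilot phase."""
    conjugated = [-p for p in pilot_phases(elements, rectenna)]
    return power_at(rectenna, elements, conjugated)


def dephased_power(elements, rectenna, trials=2000, seed=0):
    """Average power at the rectenna when the pilot is lost and the
    element phases are random (modelled here as uniform on 0..2*pi)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        phases = [rng.uniform(0, 2 * math.pi) for _ in elements]
        total += power_at(rectenna, elements, phases)
    return total / trials


if __name__ == "__main__":
    elements = [(0.5 * n, 0.0) for n in range(64)]  # hypothetical line array
    rectenna = (300.0, 10000.0)                     # hypothetical rectenna position
    focused = retrodirective_power(elements, rectenna)
    lost = dephased_power(elements, rectenna)
    print("focused / dephased power ratio:", focused / lost)
```

With N elements the conjugated beam adds coherently to a relative power of N squared at the rectenna, while random phases average to about N, so the ratio comes out close to the number of elements, consistent with the statement above.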

Rectenna

The rectenna is the microwave-to-dc converting device and is mainly composed of a receiving antenna and a rectifying circuit. Fig. 8 shows the schematic of the rectenna circuit. It consists of a receiving antenna, an input low-pass filter, a rectifying circuit and an output smoothing filter. The input filter is needed to suppress re-radiation of the high harmonics generated by the nonlinear characteristics of the rectifying circuit. Because the rectifier is a highly nonlinear circuit, these harmonic power levels must be suppressed. One method of suppressing harmonics is to place a frequency-selective surface in front of the rectenna circuit that passes the operating frequency and attenuates the harmonics.
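Why a rectifier produces harmonics at all can be seen from a short sketch. It assumes an ideal half-wave rectifier driven by a unit-amplitude sine wave, which is a textbook simplification and not the rectenna circuit of Fig. 8; the function names are hypothetical. The code computes the amplitude of the dc term and of the first few harmonics of the rectified waveform with a direct Fourier sum.

```python
import cmath
import math


def half_wave_rectify(n_samples=4096):
    """One period of a unit sine wave after an ideal half-wave rectifier
    (assumed diode model: passes the positive half, blocks the negative)."""
    return [max(0.0, math.sin(2 * math.pi * i / n_samples))
            for i in range(n_samples)]


def harmonic_amplitude(samples, harmonic):
    """Amplitude of the given harmonic of one sampled period.
    harmonic=0 returns the dc level."""
    n = len(samples)
    coeff = sum(
        s * cmath.exp(-2j * math.pi * harmonic * i / n)
        for i, s in enumerate(samples)
    ) / n
    return abs(coeff) if harmonic == 0 else 2 * abs(coeff)


if __name__ == "__main__":
    wave = half_wave_rectify()
    for h in range(5):
        print(f"harmonic {h}: {harmonic_amplitude(wave, h):.4f}")
```

The rectified waveform contains the wanted dc term together with energy at the second and fourth harmonics of the input frequency. In a rectenna that harmonic energy can travel back to the antenna and be re-radiated, which is what the input low-pass filter or a frequency-selective surface is there to prevent.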

Conclusion

The SPS will be a central attraction of space and energy technology in the coming decades. However, large-scale retrodirective power transmission has not yet been proven and needs further development. Another important area of technological development will be reducing the size and weight of individual elements in the space segment of the SPS. Large-scale transportation and robotics for the construction of large-scale structures in space are the other major fields of technology requiring further development. These technical hurdles are expected to be overcome in the coming one or two decades.